How often do we talk about online scams outside of breaking news? For many of us, the conversation ends as soon as the headline fades. Yet digital fraud is evolving faster than individual awareness can keep up. Phishing, impersonation, and deepfake schemes are no longer fringe crimes—they’re mainstream. So how can we, as digital citizens, keep each other informed instead of isolated? The idea of a Trusted Online Scam Prevention Hub isn’t just about technology; it’s about creating a shared space where vigilance becomes habit, not fear. Wouldn’t it be better if learning to spot scams felt as natural as checking the weather?

Defining What “Trust” Means in 2025

The word trust gets thrown around constantly online, but what does it really mean in this context? Is trust something platforms can design into their systems, or does it live in the behavior of their users? Some experts suggest that algorithmic transparency builds technical trust, while consistent community engagement builds emotional trust. Both matter. When users help each other verify suspicious links or discuss security breaches openly, they create a safety net that no automated tool can replicate. What forms of trust do you think will matter most as we move into the next generation of online interaction?

Turning Information Into Action

Reading about scams isn’t enough; people need simple, actionable steps. That’s why the hub’s first mission should be to transform awareness into daily routines. Imagine a central space where members can explore reliable online scam prevention tips curated by both experts and users: checklists for safe transactions, community-verified tools, and quick guides on recognizing deception. How do you think we could make these resources engaging instead of overwhelming? Maybe short weekly digests, or interactive challenges where users “test” their scam-spotting skills?

Sharing Stories That Teach, Not Scare

Everyone has a story about an almost-scam. Maybe it was a fake courier text or a convincing email from a “bank.” These stories are invaluable. They humanize data and show that anyone—no matter how careful—can be targeted. The hub could feature an anonymous storytelling wall, where users share near misses and lessons learned. Could that kind of peer-to-peer exchange reduce stigma and encourage more open conversation? And if you’ve ever reported a scam yourself, what made that experience easier—or harder—than it should’ve been?

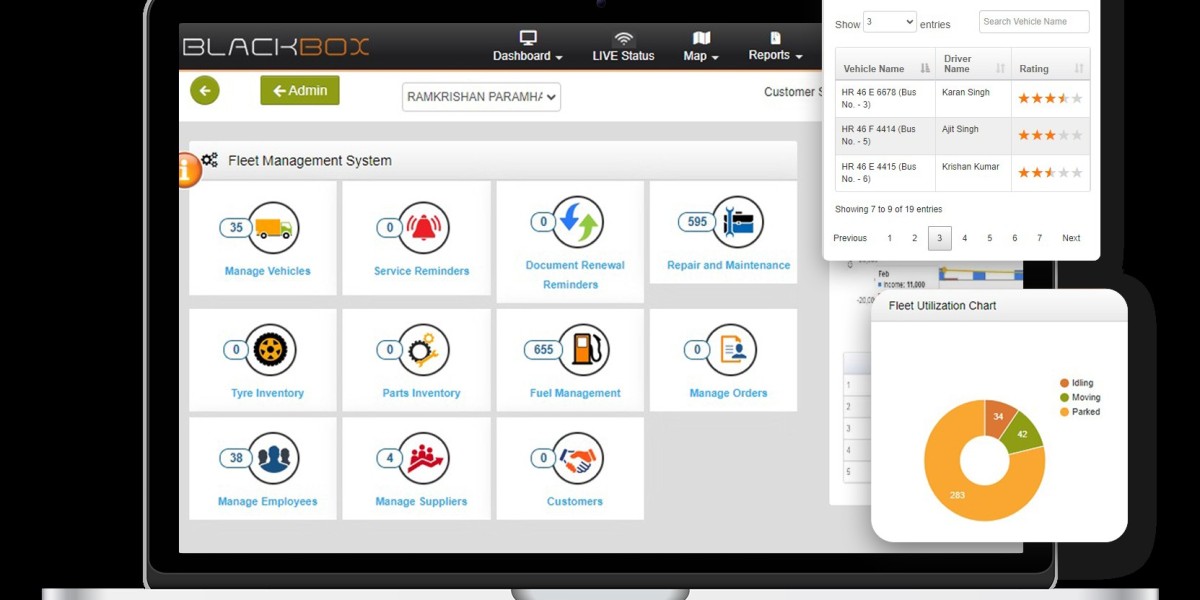

Learning from the Broader Digital Ecosystem

No community can operate in isolation. The most effective prevention hubs will borrow lessons from other data-driven industries. The betting and gaming sectors, for instance, have begun integrating behavioral analytics and real-time anomaly detection through partners like OpenBet. Their approach, which combines predictive technology with human oversight, could plausibly be adapted to scam prevention. What would it look like if community hubs used similar tools to spot suspicious traffic or trending fraud themes before they spread? Could technology and community moderation evolve together instead of separately?
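To make the idea of spotting a trending fraud theme concrete, here is a minimal sketch that flags days when reports for one scam theme jump well above the recent norm. It is an illustration only, not a description of how OpenBet or any real platform works: the function name `flag_spikes`, the seven-day window, and the threshold of 3 are all assumptions chosen for the example.

```python
from statistics import mean, stdev


def flag_spikes(daily_counts, window=7, threshold=3.0):
    """Return the indices of days whose report count spikes above the trailing window.

    daily_counts: number of community reports per day for one scam theme
                  (hypothetical data a hub might collect).
    """
    flagged = []
    for i in range(window, len(daily_counts)):
        history = daily_counts[i - window:i]
        mu = mean(history)
        # Use a floor of 1.0 so a very quiet history does not make
        # every small increase look like an anomaly.
        sigma = max(stdev(history), 1.0)
        z_score = (daily_counts[i] - mu) / sigma
        if z_score >= threshold:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    fake_courier_reports = [4, 5, 3, 6, 4, 5, 4, 5, 30, 4]
    print(flag_spikes(fake_courier_reports))
```

A flagged day would be a prompt for a human moderator to look closer rather than an automatic verdict, which keeps the human oversight described above in the loop.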

Empowering Moderators as Digital Mentors

Moderation is often seen as reactive work—removing harmful content or blocking offenders. But what if moderators became educators? A 2025 hub could transform moderators into digital mentors who explain why something is flagged, helping users understand patterns behind deception. Would that transparency make members feel more informed and less policed? It’s a question every online community should revisit. After all, empowering people to recognize manipulation themselves is far more sustainable than trying to police every post.

Bridging Generations and Access Gaps

Scams target everyone, but not everyone learns about them the same way. Younger users might recognize digital red flags instantly, while older adults could rely more on community guidance. The hub could bridge this gap through intergenerational learning—mentorship threads where digital natives share insights, and seniors contribute real-world wisdom about trust and discernment. What programs could make that collaboration feel natural rather than forced? Could gamified tutorials or reward-based learning sessions turn security awareness into something families do together?

Turning Reporting Into Collaboration

Reporting scams often feels like shouting into a void. You send a report, get an automated reply, and never hear what happens next. The new model of a trusted prevention hub should treat reporting as collaboration, not bureaucracy. When a user reports suspicious content, that data could feed into shared analytics dashboards—showing trends, repeat offenders, and prevention success stories. Transparency transforms frustration into contribution. How can communities make reporting feel rewarding, like a civic act rather than a chore?
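As a rough sketch of what could sit behind such a dashboard, the example below rolls individual reports up into the most common scam types and the sources named by more than one person. The `Report` fields, the `summarize` function, and the `.example` addresses are hypothetical and exist only for illustration; a real hub would need its own schema and privacy safeguards.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Report:
    reporter_id: str  # pseudonymous ID of the member who filed the report
    scam_type: str    # e.g. "phishing" or "fake courier"
    source: str       # the reported sender, domain, or handle


def summarize(reports, repeat_threshold=2):
    """Aggregate reports into trend counts and a list of repeat sources."""
    by_type = Counter(r.scam_type for r in reports)

    # Count distinct reporters per source so that one member filing the
    # same report many times cannot inflate a source on their own.
    reporters_by_source = {}
    for r in reports:
        reporters_by_source.setdefault(r.source, set()).add(r.reporter_id)

    repeat_sources = sorted(
        source
        for source, reporters in reporters_by_source.items()
        if len(reporters) >= repeat_threshold
    )
    return {"top_types": by_type.most_common(3), "repeat_sources": repeat_sources}


if __name__ == "__main__":
    sample = [
        Report("u1", "phishing", "bank-alerts.example"),
        Report("u2", "phishing", "bank-alerts.example"),
        Report("u1", "fake courier", "parcel.example"),
        Report("u1", "fake courier", "parcel.example"),
    ]
    print(summarize(sample))
```

Publishing a summary like this back to the people who filed the reports is one way to show that a report led somewhere.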

Fostering Emotional Safety Alongside Digital Safety

Behind every scam is a victim who might feel embarrassed or ashamed. If a hub only focuses on facts and tech, it risks alienating those who need empathy most. Emotional safety means validating experiences and offering recovery resources—financial counseling, mental health support, or just a place to talk without judgment. Should scam prevention be seen as part of overall digital wellbeing, rather than a separate category of “risk management”? Perhaps healing and prevention belong in the same conversation.

A Future Built on Shared Responsibility

The vision for a Trusted Online Scam Prevention Hub in 2025 isn’t about control; it’s about connection. As scams become smarter, communities must become stronger. The future lies in shared vigilance: users trading prevention strategies, experts analyzing new tactics, and technology like OpenBet’s systems detecting anomalies early. But none of it works without participation.

So, what would make you join a community like this? Would it be interactive education, a sense of belonging, or the satisfaction of protecting others? The answers to those questions will shape not only how we build safer online spaces, but how we define trust in the digital era itself.